If you store customer or personal data on a virtual server, you need to back it up at regular intervals. Data can become unavailable or damaged for many reasons, including accidental deletion, misconfiguration, hacker intrusions, and software upgrades that overwrite previous settings. A recent backup makes recovery in these situations much easier.

Assess your needs

No single backup approach suits every system. Before you make your first backup or schedule regular ones, work out exactly what you need so that you can pick the right tool.

What to back up?

What is on your server that would be difficult or impossible to replace if lost? Here are some common examples.

- CMS websites: Data-driven websites such as those built on WordPress, Drupal, or Magento that use a database to store content.

- HTML websites: If you have a standard HTML website, backing up its public directory is probably enough.

- Email: If you are using a virtual server as an email server, you should back up the raw email files.

- Multimedia files: Be sure to back up images, videos, and audio files.

- Customer data: Sales data and customer financial transactions are usually stored in a database, so make sure your backup plan includes that database.

- Custom setup: If your virtual server is heavily customized or took a long time to set up, consider backing up the whole server. At a minimum, back up your software configuration files. A full-server backup naturally covers your public content as well.

Once you have identified the items to back up, locate them on the server. Note the exact path or database name of each item.

The type of backup you make also matters, because its format determines what you can do with it later. Think about how you will need to recover the data, and choose the backup type accordingly. There are two basic types of backups: file system backups and database dumps.

File system backup

A file system backup copies all or part of the file system, preserving its directory structure and permissions. It suits HTML files, software configuration files, email, and multimedia files. When you restore these files to the virtual server, they work just as they did before. A full-server snapshot is a comprehensive file system backup that captures the entire state of your server at a specific point in time. If you back up files without their permissions, recovery takes longer, because you have to restore the permissions by hand.

Database dump

File system backups are not always the best choice for databases. A full-server backup can restore your database, but the raw database files it contains are of little use on their own: in practice they are only usable in a full-server restore, and files copied while the database is running may be inconsistent. A SQL dump (or a similar export) works better. It produces a readable file of SQL commands that you can easily move to another server and load into another database of the same type.

Finally, choose the backup type: a file system backup, a database dump, or both. If you use both, create the database dump first, so that the dump file can then be saved as part of the file system backup.
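As a rough sketch of that order, the bash function below dumps a MySQL database and then archives the site folder together with the fresh dump. The function name backup_site and the example arguments are hypothetical, and the sketch assumes MySQL credentials are stored in ~/.my.cnf and that all paths are absolute.

```shell
# Hypothetical helper: dump first, then archive files + dump in one step.
# Assumes MySQL credentials in ~/.my.cnf and absolute paths as arguments.
backup_site() {
  local db="$1" site_dir="$2" out_dir="$3"
  local stamp dump
  stamp=$(date +%Y-%m-%d)
  dump="${db}_$stamp.sql"
  mkdir -p "$out_dir" || return 1

  # Step 1: dump the database first, outside the public web folder.
  # --single-transaction gives a consistent snapshot of InnoDB tables.
  mysqldump --single-transaction "$db" > "$out_dir/$dump" || return 1

  # Step 2: archive the site files together with the fresh dump.
  # Each -C changes directory so the archive stores short relative paths.
  tar -czf "$out_dir/site_$stamp.tar.gz" \
    -C "$(dirname "$site_dir")" "$(basename "$site_dir")" \
    -C "$out_dir" "$dump"
}
```

For example: backup_site site_db "$HOME/public" "$HOME/backups". Writing the dump outside the public web folder keeps it from being downloadable through the website.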

When to back up

Next, decide how often to back up your data. This depends on how often your server changes and how critical those changes are to you. Here are some common schedules.

- Online store: at least daily.

- News site or blog: as often as you update.

- Development server: whenever you make changes.

- Game server: at least daily.

- Static sites with fixed content: every 6 months or after any major changes.

- Email server: at least daily.

Your schedule will also determine whether you back up manually or automatically.

Where to store backups?

Now you need to think about where to store the data after it is backed up. Here are some of the most common backup storage locations.

- Same server: This is the easiest place to store backups. But keep in mind that if your server is compromised at the root level or your data is accidentally deleted, your backup copy may be lost along with it.

- Another server: You can store your backups on another server. This is one of the safest options.

- Personal device: You can back up to your desktop computer or to a portable hard drive. However, a home office is not as secure as a professional data center, and you should also consider the reliability of the drive hardware.

You should also consider how many backups your storage can hold. Many people keep at least two backups (an older version known to be good, and the latest version), or even every version. The more backups you can keep, the better off you will be, within the limits of your storage capacity.

Backup rotation

Finally, decide how many backups to keep and how long to keep them. Any backup is better than none, but most people keep at least two. For example, if you overwrite a single backup every day and keep no older versions, you will have nothing to fall back on if you discover a hack from the week before. The safest option is to keep backups from consecutive intervals, without overwriting one another, for as long as possible. Just make sure the backups do not outgrow the storage space you have set aside for them. Backup formats that use compression and other optimizations make this easier.

Choosing the right tool for data backup

Once you understand your backup needs, choose a suitable tool. At this point, you should know:

- Which files and databases you want to back up.

- When you want to make new backups.

- Where you want to store your backups.

- How many old backups you want to keep.

In this tutorial, we examine four different backup tools and see how each meets these criteria.

Rsync

Rsync is a free file-copying tool, and it is the one we recommend. It is considered one of the best backup tools for several reasons:

- Simple to configure, with many advanced options available when you need them.

- Easy to automate: rsync commands can run as cron jobs.

- Efficient: rsync transfers only the files that have changed, which saves time and disk space.

On the other hand, you need basic command-line skills to make backups and restore files.

- Backup data: You specify the path for this file system backup.

- Backup time: The basic command runs manually, but you can schedule it to run automatically. Later in this tutorial, we set up rsync for daily backups.

- Storage location: You specify the destination. You can save to another folder on the server, to another Linux server, or to your home computer. As long as the two systems can connect over SSH and both can run rsync, you can store your backup anywhere.

- Version rotation: Rotation is manual by default. With the right options, however, you can keep all older backups in little space.

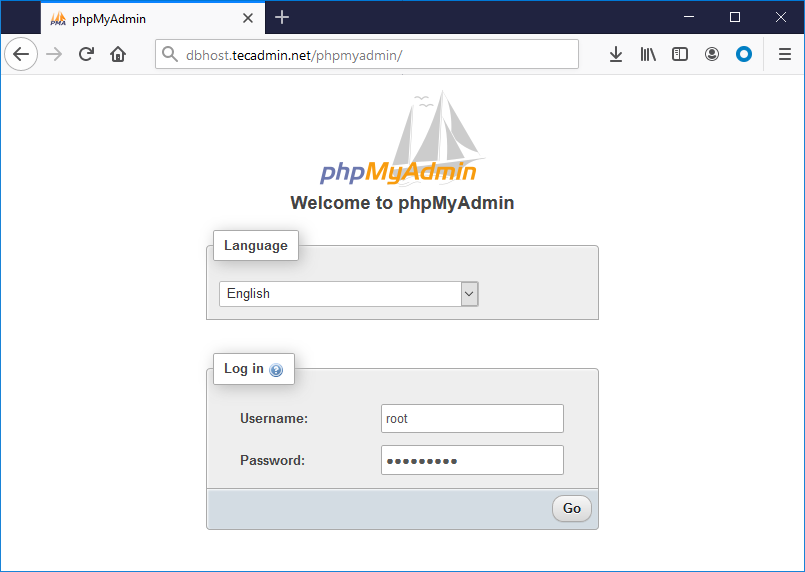

MySQL backups

Data stored in a database can change rapidly, and a MySQL dump is probably the best way to back it up. If you only take a snapshot or another backup that copies files, you get only the raw database files, which are really usable only in a full-server restore. That may not be what you need.

- Backup data: databases and tables

- Backup time: The basic command runs manually, but you can schedule it to run automatically.

- Storage location: The dump file is written wherever you choose, for example on the server or on your home computer, and you can change this location.

- Version rotation: Rotation is manual.

MySQL dumps are also the usual way to make readable backups of your databases and to move them to a new database server.
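A minimal sketch of such a move, in bash, might look like this. export_db and import_db are hypothetical helper names; the sketch assumes MySQL credentials in ~/.my.cnf on each server and that the target database already exists on the new one.

```shell
# Hypothetical helpers (bash). Credentials are assumed to be in ~/.my.cnf.
export_db() {
  local db="$1" dump_file="$2"
  # --single-transaction: consistent snapshot of InnoDB tables without locking.
  # --routines: also export stored procedures and functions.
  mysqldump --single-transaction --routines "$db" | gzip > "$dump_file"
  # Copy PIPESTATUS before any other command resets it.
  local status=("${PIPESTATUS[@]}")
  # Fail if either mysqldump or gzip failed, not just the last command.
  [ "${status[0]}" -eq 0 ] && [ "${status[1]}" -eq 0 ]
}

import_db() {
  local db="$1" dump_file="$2"
  # The dump is plain SQL, so the mysql client can replay it.
  # Returns the exit status of mysql, the last command in the pipeline.
  gunzip -c "$dump_file" | mysql "$db"
}
```

Run export_db site_db site_db.sql.gz on the old server, copy the file with scp, and run import_db site_db site_db.sql.gz on the new one. Checking PIPESTATUS makes a failed mysqldump visible even though gzip, the last command in the pipeline, succeeded.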

Tar

Tar can copy and compress your files into a single small backup file on the server. This has the following advantages:

- It saves disk space.

- It reduces the amount of data transferred if you use remote storage.

- The backup is easy to handle, because it is a single file.

Note, however, that you must extract the archive before you can use it for recovery; you cannot easily open it and browse its folders.

- Backup Data: You specify the path for this filesystem backup.

- Backup time: The basic command runs manually, but you can schedule it to run automatically.

- Storage location: By default, the backup file is stored on the server. If you want to keep it somewhere else, you have to move it yourself.

- Version rotation: Rotation is manual, but because the archives are compact, keeping several copies is easy.

Here is the basic tar command.

```shell
tar pczvf my_backup_file.tar.gz /path/to/source/content
```

Explanation of the options in the tar command

p or --preserve-permissions: preserve file permissions (this matters when extracting; permissions are always recorded when an archive is created)

c or --create: create a new archive

z or --gzip: compress the archive with gzip

v or --verbose: list the files being processed

f or --file=ARCHIVE: use the next argument as the archive's file name
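The same options can be wrapped in small bash helpers for creating, inspecting, and restoring an archive. The function names are hypothetical; this is a sketch, not a complete backup script.

```shell
# Hypothetical helper names; source and archive paths are examples.
make_archive() {
  local src="$1" archive="$2"
  # c = create, z = gzip, f = archive file name. -C stores paths relative
  # to the parent folder (e.g. public/index.html) instead of absolute paths.
  tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")"
}

list_archive() {
  # t = list the contents without extracting anything.
  tar -tzvf "$1"
}

restore_archive() {
  local archive="$1" target="$2"
  mkdir -p "$target" || return 1
  # x = extract; p = apply the stored permissions instead of the umask.
  tar -xzpf "$archive" -C "$target"
}
```

For example: make_archive "$HOME/public" "$HOME/backups/public_$(date +%Y-%m-%d).tar.gz". Because -C keeps the stored paths relative, the archive can be extracted into any folder, not only its original location.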

Rdiff-backup

Rdiff-backup is a tool designed for incremental backups. As its website explains, the idea is to combine the best features of a mirror and an incremental backup: you end up with a plain copy of your files in which older versions are still accessible.

- Backup Data: You specify the path for this filesystem backup.

- Backup time: The basic command runs manually, but you can schedule it to run automatically.

- Storage location: You specify the destination. You can save in another folder on the server, another Linux server or your home computer.

- Version rotation: All old and new backups are kept.

Manual backup with Rsync

This part of the tutorial walks through an example of using rsync; the steps for the other tools above are similar. This backup is manual and runs once. The files are saved on the system where you run the command, so make sure you are logged in to the computer or server where you want to store the backup.

Here, production_server is the server whose data you want to back up, and backup_server or personal_computer is the server or computer where you want to store the backups. The examples assume that production_server runs Ubuntu 12.04 LTS; the backup side can be any of several server or PC types.

Follow the steps below to manually back up the server.

1) Install rsync on both backup_server and production_server:

```shell
sudo apt-get install rsync
```

2) Run the rsync command on backup_server or personal_computer:

```shell
rsync -ahvz user@production_server:/path/to/source/content /path/to/local/backup/storage/
```

3) When prompted, enter the SSH password for production_server. rsync lists the files as it copies them and finishes with a summary similar to this:

```
sent 100 bytes received 2.76K bytes 1.90K bytes/sec
total size is 20.73K speedup is 7.26
```

That's it! To verify the copy, open the folder you chose for local storage and check its contents. The next sections show how to automate backups.
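Before a large first copy, it can help to preview what rsync would do. The hypothetical bash helper below runs the same command with --dry-run, which makes rsync report the transfer without writing anything, and --itemize-changes, which prints a short change code for each file.

```shell
# Hypothetical helper: preview a backup without copying anything.
# --dry-run (-n): only report what would be transferred.
# --itemize-changes (-i): show a change code for each file.
preview_backup() {
  local src="$1" dest="$2"
  rsync -ahvz --dry-run --itemize-changes "$src" "$dest"
}

# Example:
# preview_backup user@production_server:/path/to/source/content /path/to/local/backup/storage/
```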

Setting up automatic backups on a Linux server

This section uses rsync to make daily backups, each saved in a separate folder named after its date. Because unchanged files are saved as hard links rather than as separate copies, this needs only a little more space than the production data itself. If you have large files that change constantly, however, you will need more space.

This approach is ideal if you want to store your backups automatically on another Linux server, and it is among the easiest and safest ways to do so. It can also be adapted to back up to a PC, for example by starting the backup whenever the computer boots.

- Backup Data: You specify the path for the filesystem backup.

- Backup Time: This is an automatic daily backup.

- Storage Location: The files are stored on the system where you run the command. So be sure to log in first to the server or computer you want to save the backups to.

- Version rotation: All old backups are kept. Unchanged files are stored as hard links, which saves disk space.

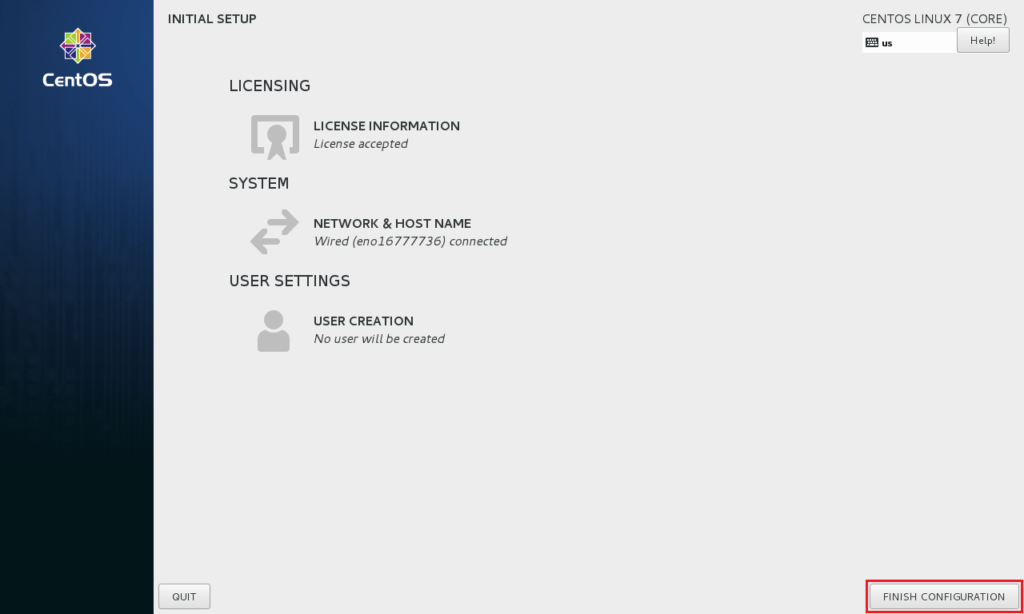

Follow the steps below to set up automatic data backup to a Linux server.

1) Install rsync on both servers. On Ubuntu, run sudo apt-get install rsync.

2) On backup_server, create an SSH key without a passphrase by running ssh-keygen -t rsa. When prompted for a passphrase, just press Return; do not enter one.

3) From backup_server, copy the public key to production_server and append it to the list of authorized keys there:

```shell
scp ~/.ssh/id_rsa.pub user@production_server:~/.ssh/uploaded_key.pub
ssh user@production_server 'cat ~/.ssh/uploaded_key.pub >> ~/.ssh/authorized_keys'
```

4) Test the connection from backup_server to production_server. The command should run without asking for a password:

```shell
ssh user@production_server 'ls -al'
```

5) On backup_server, create a directory for the backups with mkdir ~/backups.

6) Now make a manual backup and save it to ~/backups/public_orig/. This is the reference version that all future backups are compared against. From backup_server, run:

```shell
rsync -ahvz user@production_server:~/public ~/backups/public_orig/
```

You should see the list of copied folders and files, followed by a summary similar to this:

```
sent 100 bytes received 2.76K bytes 1.90K bytes/sec
total size is 20.73K speedup is 7.26
```

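7) Next, build the command that the scheduled backup will run. Below is an example that you can adapt. Run it once manually from backup_server to make sure it works without errors:

```shell
rsync -ahvz --delete --link-dest=$HOME/backups/public_orig user@production_server:~/public ~/backups/public_$(date -I)
```

The comparison folder is written as $HOME/backups/public_orig rather than ~/backups/public_orig because the shell does not expand a ~ that follows --link-dest=, and rsync treats a relative --link-dest path as relative to the destination folder. With a literal ~, rsync cannot find the comparison folder and copies every file instead of creating hard links.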

8) The output should resemble the output of step 6. Check the ~/backups/ folder to confirm that a new dated folder was created.

9) Add the command to cron so that it runs automatically every day. On backup_server, open your crontab for editing with crontab -e.

Tip: The first time you run this command, you may be asked to choose a text editor.

10) Add the following line to the end of the file. It is the command from step 7, preceded by the cron schedule fields. With this entry, cron runs rsync every day at 3:00 a.m. to back up the data on production_server.

```
0 3 * * * rsync -ahvz --delete --link-dest=$HOME/backups/public_orig user@production_server:~/public ~/backups/public_$(date -I)
```

Your backups now run automatically. If anything goes wrong with the server, you can restore it from a backup.
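If you prefer to keep the crontab entry short, the command can live in a small script instead. The sketch below is a POSIX sh script; its file name (~/bin/daily_backup.sh), log file, and function name are hypothetical. It gives --link-dest an absolute path and writes rsync's output to a log file rather than a cron email.

```shell
#!/bin/sh
# Hypothetical script: ~/bin/daily_backup.sh (an assumed name and location).
# Source, destination and log paths are examples; adjust them.

REMOTE="user@production_server:~/public"   # ~ stays literal; the remote side expands it
BASE="$HOME/backups"                       # absolute path for all local folders

run_backup() {
  today=$(date +%Y-%m-%d)   # same value as date -I; also works with BSD date
  mkdir -p "$BASE" || return 1
  # --link-dest gets an absolute path: a ~ after "=" is not expanded by the
  # shell, and a relative path would be resolved against the destination.
  # All output is appended to a log file instead of being mailed by cron.
  rsync -ahz --delete --link-dest="$BASE/public_orig" \
    "$REMOTE" "$BASE/public_$today" >>"$BASE/backup.log" 2>&1
}

# Run only when executed as this script, not when the file is sourced.
case "$0" in
  *daily_backup.sh) run_backup ;;
esac
```

Make the script executable with chmod +x, then point the crontab entry at it: 0 3 * * * $HOME/bin/daily_backup.sh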

Set up data backup to a desktop computer

Now that you know how to back up one server to another Linux server, let's back up to a desktop computer. There are several reasons to do this. It is cheap: you can store everything on your home computer without paying for a second virtual server. It is also convenient if you want your own local development environment.

- Backup Data: You specify the path for the filesystem backup.

- Backup Time: This is an automatic daily backup.

- Storage Location: The files are stored on the system where you run the command, so be sure to log in first to the computer where you want to save the backups. In this section, that is your desktop computer.

- Version rotation: All old backups are kept. Unchanged files are stored as hard links, which saves disk space.

Make sure rsync is installed on your desktop computer. On Linux, run the command that matches your distribution (apt-get on Debian and Ubuntu, yum on Red Hat-based systems such as CentOS):

```shell
sudo apt-get install rsync
sudo yum install rsync
```

Also, Mac OS X has rsync installed by default.

Linux

Linux users should follow the instructions earlier in the section on setting up automatic backups to a Linux server.

Mac OS X operating system

OS X users can also follow the earlier guide to setting up automatic backups to a Linux server. The only differences are that rsync is already installed and that the date variable must change, because the date command that ships with OS X may not support the -I option. The rsync command from step 7 becomes:

```shell
rsync -ahvz --delete --link-dest=$HOME/backups/public_orig user@production_server:~/public ~/backups/public_$(date +%Y-%m-%d)
```

The crontab entry from step 10 becomes the following. Inside a crontab, each % must be escaped as \%, because cron treats an unescaped % as a newline:

```
0 3 * * * rsync -ahvz --delete --link-dest=$HOME/backups/public_orig user@production_server:~/public ~/backups/public_$(date +\%Y-\%m-\%d)
```

Note: If cron reports a permission error that did not occur when you ran the command manually, your SSH key probably has a passphrase. It works interactively because the passphrase is stored in your OS X keychain, but the cron job cannot use it. To fix this, create a new OS X user with a passwordless key.

Windows

Things are a little different on Windows. You need to install several tools that other systems include by default. Also remember that Windows does not support Linux file ownership and permissions, so you will need some extra work to restore ownership and permissions from these backups.

Follow the steps below to set up automatic data backup from the server to a Windows desktop computer.

1) Install the cwRsync program. The latest version is available for free from the cwRsync website. Be careful not to select the server version.

2) The SSH key must be generated with the ssh-keygen program that comes with cwRsync, so that cwRsync can use it. In a Windows command prompt, change to the directory where cwRsync is installed, for example:

```
cd C:\Program Files (x86)\cwRsync\bin
```

3) Generate an SSH key for your computer by running ssh-keygen -t rsa.

4) When asked where to save the key, enter an explicit Windows path; the default path will not work. For example, you can use the path below. Make sure the directories already exist.

```
C:\Users\user\.ssh\id_rsa
```

5) When the passphrase prompt appears, just press Return. You should now see the generated public and private keys in the directory you specified.

6) Next, send the public key to the server. You can transfer the file any way you like; here we use PSCP, a program from the PuTTY family that provides scp on Windows. You can download it from the PuTTY website.

7) Add the PSCP and cwRsync directories to the Path environment variable so that you can run them directly from the command line. On Windows 7 and 8, the steps are:

- From the Start menu, open Control Panel.

- Select the “System and Security” option.

- Now select System.

- From the left bar, click Advanced system settings.

- Go to the Advanced tab.

- Click the “Environment Variables…” button.

- In the System variables section, scroll down to the Path variable, select it, and click Edit…

- Do not delete anything; you only want to append to the existing value.

- Append the folder that contains pscp.exe (the PuTTY folder) and the cwRsync bin folder, separating the paths with semicolons (;). For example:

```
C:\Program Files (x86)\PuTTY;C:\Program Files (x86)\cwRsync\bin;
```

- Click OK to return to the Control Panel.

- If a command prompt is already open, close it and open a new one so that it picks up the updated Path.

8) Use PSCP to send the key. In a Windows command prompt, enter:

```
pscp -scp C:\Users\user\.ssh\id_rsa.pub user@production_server:/home/user/.ssh/uploaded_key.pub
```

9) On production_server, append the new key to the authorized_keys file:

```shell
cat ~/.ssh/uploaded_key.pub >> ~/.ssh/authorized_keys
```

10) Create a directory on the Windows system for the backups, such as C:\Users\user\backups.

11) Make a manual backup to C:\Users\user\backups\public_orig\. This is the reference version that all future backups are compared against. From the Windows system, run:

```
rsync -hrtvz --chmod u+rwx user@production_server:~/public /cygdrive/c/Users/user/backups/public_orig/
```

Note that the paths in these commands are Linux-style, even on Windows. For example, C:\Users\user\backups\public_orig\ becomes /cygdrive/c/Users/user/backups/public_orig/.

This time you will be prompted for the production_server password. You should then see a summary similar to this:

```
sent 100 bytes received 2.76K bytes 1.90K bytes/sec
total size is 20.73K speedup is 7.26
```

You can also check the contents of the %HOMEPATH%\backups\public_orig\ directory with the dir command to make sure everything was copied.
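The path rule above is mechanical, so it can be expressed as a small bash function. to_cygdrive is a hypothetical helper for illustration only; in a full Cygwin installation, the cygpath -u command performs this conversion.

```shell
# Hypothetical helper (bash): convert a Windows path such as
# C:\Users\user\backups\ into the /cygdrive/... form used by cwRsync.
to_cygdrive() {
  local win_path="$1"
  local drive="${win_path%%:*}"   # text before the first colon, e.g. C
  local rest="${win_path#*:}"     # text after it, e.g. \Users\user\backups\
  drive=$(printf '%s' "$drive" | tr '[:upper:]' '[:lower:]')
  rest="${rest//\\//}"            # replace every backslash with a slash
  printf '/cygdrive/%s%s\n' "$drive" "$rest"
}

# Example:
# to_cygdrive 'C:\Users\user\backups\public_orig\'
```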

12) Add the final version of the command to the cwrsync.cmd file, run it once manually to check that it works, and then schedule it to run automatically:

- From the Start menu, in the All Programs section, open the cwRsync folder.

- Right click on Batch example and select “Run as administrator” option.

- This opens the cmd file for editing.

- Do not change any of the default settings.

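- At the bottom of this file, add the following line. The %DATE:~10,4%, %DATE:~4,2% and %DATE:~7,2% substrings assume the US date format, such as Tue 01/14/2014; adjust the offsets if your regional settings format dates differently.

```
rsync -hrtvz --chmod u+rwx --delete --link-dest=/cygdrive/c/Users/user/backups/public_orig user@production_server:~/public /cygdrive/c/Users/user/backups/public_%DATE:~10,4%-%DATE:~4,2%-%DATE:~7,2%
```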

- Save the file.

- Run the file from the command line:

```
"C:\Program Files (x86)\cwRsync\cwrsync.cmd"
```

This creates today's backup and confirms that the environment is set up correctly for the passwordless SSH key.

- You should see output similar to step 11.

13) Finally, add cwrsync.cmd as a daily task in Task Scheduler:

- From the Start menu, go to All Programs > Accessories > System Tools > Task Scheduler.

- Click Create Basic Task… to open the wizard.

- Enter a name and description, for example "rsync backups".

- Select "Daily" from the drop-down list.

- Set today as the start date, and choose a backup time when your server is not busy and your computer is on.

- Select the “Start a program” option.

- In the Program/script field, enter the following path:

```
"C:\Program Files (x86)\cwRsync\cwrsync.cmd"
```

Keep in mind that these backups count against your bandwidth allowance, and running too many of them can cost you extra.

Your server is now backed up automatically every day, so whatever happens to it, you can restore it to a suitable point in time.

Restore Rsync backup

This section shows how to restore a server from an rsync backup:

- Navigate to the backups directory on your backup_server or desktop.

- Locate the folder with the appropriate date.

- Decide whether you want to restore everything or only specific files.

- Transfer the selected files to production_server with scp, SFTP, or rsync.

- Windows users: after the transfer, set the correct Linux file ownership and permissions on the server.
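The transfer step can be sketched as a bash helper run on backup_server or the desktop. restore_backup is a hypothetical name, and the sketch assumes the folder layout created by the commands above, where each dated folder contains a public subfolder because the source ~/public had no trailing slash.

```shell
# Hypothetical helper (bash), run on backup_server or the desktop.
# Assumed layout: ~/backups/public_YYYY-MM-DD/public/...
restore_backup() {
  local day="$1"            # e.g. 2014-01-15
  local item="${2:-}"       # optional path inside public/, e.g. images/logo.png
  local snapshot="$HOME/backups/public_$day/public"
  if [ ! -d "$snapshot" ]; then
    echo "No backup found for $day" >&2
    return 1
  fi
  # -R (--relative) with the /./ marker recreates the part of the path after
  # /./ under the destination, so files and folders land where they were.
  # The remote path is quoted so that ~ is expanded on production_server.
  rsync -ahvz -R "$snapshot/./$item" "user@production_server:~/public/"
}
```

restore_backup 2014-01-15 restores the whole site from that day, while restore_backup 2014-01-15 images/logo.png restores a single file. Because --delete is not used, files created on the server after the backup are left in place.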

Maintenance of backup copies

Even when backups are automatic and properly configured, you still need to monitor and maintain them. This avoids unpleasant surprises and keeps the backup process running smoothly.

- If you back up to a remote server or a desktop (with rsync or any other tool), compare the size of your backups with your monthly traffic allowance, and keep an eye on bandwidth usage so that you don't incur extra charges.

- To send cron's notification emails to a different address, add a line such as MAILTO="you@example.com" (with your own address) at the top of the crontab file, above the jobs.

- To stop cron from sending notification emails, add MAILTO="" there instead.

- Make sure your backup server has enough disk space; sometimes you will need to delete older backups. If you use rsync backups and have large files that change frequently, the disk will fill up quickly. If necessary, you can delete old backups automatically.

- If you use automatic rsync backups, you may want to point the --link-dest folder in the cron command at a newer backup folder from time to time. You have probably made many changes since the original backup, and comparing against a more recent folder saves time and disk space.
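The last two tasks can both be scripted. The bash helpers below are a sketch; their names are hypothetical, and they assume the ~/backups/public_YYYY-MM-DD folders created by the cron job. Because ISO dates sort correctly as plain text, sort and tail are enough to find the newest folders.

```shell
# Hypothetical helpers (bash) for the dated public_YYYY-MM-DD folders.

# Print the newest dated backup folder in $1, or nothing if there is none.
latest_backup() {
  ls -1d "$1"/public_[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9] 2>/dev/null |
    sort | tail -n 1
}

# Delete all but the newest $2 dated backup folders in $1.
# public_orig does not match the date pattern, so it is never removed.
prune_backups() {
  local dir="$1" keep="$2" old
  ls -1d "$dir"/public_[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9] 2>/dev/null |
    sort -r | tail -n +"$((keep + 1))" |
    while IFS= read -r old; do
      rm -rf -- "$old"
    done
}

# Example use in a backup script (assumed layout), before today's run:
#   prev=$(latest_backup "$HOME/backups")
#   rsync -ahz --delete --link-dest="${prev:-$HOME/backups/public_orig}" \
#     user@production_server:~/public "$HOME/backups/public_$(date +%Y-%m-%d)"
#   prune_backups "$HOME/backups" 30
```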

Understanding the rsync command

Rsync is a powerful tool, but its many options can be confusing. This section explains them, which helps if you want to customize the command or run into errors. The basic command used earlier is:

```shell
rsync -ahvz user@production_server:/path/to/source/content /path/to/local/backup/storage/
```

rsync

A basic rsync command has the format rsync copyfrom copyto.

copyfrom is the file or directory you want to back up, and copyto is where you want to save the backup. Both arguments are required. Here is a basic rsync command with these two arguments:

```
rsync user@production_server:~/public ~/backups/mybackup
|---| |-----------------------------| |----------------|
  ^                  ^                        ^
  |                  |                        |
rsync             copyfrom                 copyto
```

You can also pass options to rsync. Options go before the two main arguments:

```shell
rsync --options copyfrom copyto
```

-ahvz

Here are some standard rsync options.

These are four rsync options combined into one argument. You can also write them separately, as -a -h -v -z.

These options have the following effects:

-a or --archive: archive mode; copies directories recursively and preserves permissions, timestamps, symbolic links and, when rsync runs as root on the receiving side, ownership

-h or --human-readable: print numbers in a human-readable format

-v or --verbose: show more output

-z or --compress: compress file data during the transfer

You can add or remove any rsync options. For example, if you don't want to see all the output, leave out -v and use -ahz.

When making a backup, the most important option is -a (--archive).

Source location

The copyfrom location is the path on production_server that you want to back up. Here, it is the folder that holds your server's content files:

```
user@production_server:~/public
|--------------------| |------|
          ^                ^
          |                |
      SSH login           path
```

Because you are copying from a remote server (production_server), you first give the SSH login. Then, after the colon (:), give the exact path of the folder you want to back up.

In this example, you are backing up the ~/public directory, where your websites are stored. The ~ symbol stands for the user's home directory, /home/user/. Because there is no trailing / after public, rsync backs up the public folder itself, not just its contents.

To back up the entire server from the root, use the /* path. You should also exclude some folders so that you don't get a flood of messages and warnings during the backup. For example, /dev, /proc, /sys, /tmp, and /run have no permanent content, and /mnt is a mount point for other file systems. To exclude items, add the --exclude option at the end of the rsync command:

```shell
--exclude={/dev/*,/proc/*,/sys/*,/tmp/*,/run/*,/mnt/*}
```

You also need a user with root or sudo access to back up / or other system-level directories. If you use a sudo user, you must either allow that user to run rsync through sudo without a password prompt or otherwise provide the sudo password on production_server.
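The braces in the --exclude example are expanded by bash into six separate --exclude options. The /bin/sh that cron uses on many systems does not perform brace expansion, so for scheduled jobs a pattern file read with rsync's --exclude-from option is safer. A sketch, with a hypothetical helper name and file location:

```shell
# Hypothetical helper: write the exclude patterns, one per line, to a file.
# The trailing /* keeps each directory itself (so mount points such as
# /proc still exist after a restore) but skips its contents.
write_excludes() {
  printf '%s\n' '/dev/*' '/proc/*' '/sys/*' '/tmp/*' '/run/*' '/mnt/*' > "$1"
}

# Example use (the pattern file location is an assumption):
#   write_excludes "$HOME/backup-excludes.txt"
#   rsync -ahvz --exclude-from="$HOME/backup-excludes.txt" \
#     'user@production_server:/*' "$HOME/backups/full_$(date +%Y-%m-%d)"
```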

Destination location

The copyto location is the path where you want to save the backup data on backup_server.

In the automatic backup command, a date variable is appended to the file path.

```
~/backups/public_$(date -I)
                 |--------| <- date variable
|-------------------------|
             ^
             |
            path
```

This is the local path on backup_server where backups are stored. $(date -I) is a command substitution: it runs the date command and appends the current date, in YYYY-MM-DD form, to the path. As a result, each backup gets its own folder, and backups are easy to find in the storage location.
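A small bash sketch of the same idea; backup_dest is a hypothetical helper. It uses date +%Y-%m-%d, which prints the same YYYY-MM-DD value as GNU date -I and also works with the BSD date command on OS X.

```shell
# Hypothetical helper: print today's backup folder, e.g.
# /home/user/backups/public_2014-01-15
backup_dest() {
  # +%Y-%m-%d is the portable spelling of GNU date -I.
  printf '%s/backups/public_%s\n' "$HOME" "$(date +%Y-%m-%d)"
}
```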

Cron

The cron entry builds on the previous command and adds some advanced options:

```
0 3 * * * rsync -ahvz --delete --link-dest=$HOME/backups/public_orig user@production_server:~/public ~/backups/public_$(date -I)
```

The numbers and asterisks at the start of the line tell cron when to run the command. (This is the line you added to the crontab file.)

```
0 3 * * * command
| | | | | |
| | | | | +--- shell command
| | | | +----- day of week (0-7; 0 and 7 are Sunday)
| | | +------- month (1-12)
| | +--------- day of month (1-31)
| +----------- hour (0-23)
+------------- minute (0-59)
```

For each of the five time fields (minute, hour, day of month, month, day of week), you can use a specific number or *, which means "every". Hours use the 24-hour clock. The example above runs the command at minute 0 of hour 3, that is, at 3:00 a.m. every day. Everything after the fifth field is the command itself, which cron runs as if you had typed it into a shell.

To test the job, you can temporarily set the schedule to * * * * *, which runs it every minute. Backing up this often can quickly use up your traffic allowance, so keep an eye on the cost and restore the normal schedule afterwards.

--delete

--delete is another rsync option.

With --delete, a file that has been deleted from the copyfrom location is left out of the newest backup, even if it still exists in older backups. The file is not removed from those older backups. This keeps each backup an accurate copy of the server as it was on that day, which makes it easier to find what you need.

--link-dest

This option makes incremental rsync backups much more efficient.

```shell
--link-dest=$HOME/backups/public_orig
```

--link-dest is essential for incremental backup schemes. rsync compares each file with its counterpart in the --link-dest folder; files that have not changed are hard-linked to that copy instead of being transferred and stored again. This lets every backup live in its own folder under a different name, and you can keep several complete backups without using much disk space.

The --link-dest option takes one argument:

```shell
--link-dest=comparison_backup_folder
```

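Replace comparison_backup_folder with an earlier backup folder, given as an absolute path, because rsync interprets a relative path relative to the destination folder. The more closely that folder matches the current production data, the less rsync has to transfer and store.

The trailing / is left off so that the path has the same form as the destination (copyto) path.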
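To confirm that --link-dest is actually saving space, you can look at link counts and disk usage. The bash helpers below are a sketch with hypothetical names; they rely on du counting a hard-linked file only once per run and on the -links test of find.

```shell
# Hypothetical helpers for checking that --link-dest is saving space.

# du counts a file with several hard links only once per run, so every
# folder after the first one listed shows roughly the size of what changed.
snapshot_usage() {
  du -sk "$@"
}

# Number of files in a backup folder that share their data with another
# folder (link count above 1). Zero usually means --link-dest had no effect.
shared_file_count() {
  find "$1" -type f -links +1 | wc -l | tr -d ' '
}

# Example:
#   snapshot_usage "$HOME"/backups/public_*
#   shared_file_count "$HOME/backups/public_$(date +%Y-%m-%d)"
```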

Different server locations

In this tutorial, we used a remote server named production_server and a local server named backup_server. However, rsync also works the other way round, with a local production_server and a remote backup_server. Backups of local data can also be saved to another folder on the same system or to another remote server. Any remote location requires an SSH login before the file path.

Running rsync on the backup server is called a "pull" backup, and running it on the production server is called a "push" backup. Local folders need no SSH login; remote folders need the SSH login followed by a colon (:) before the path. Here are some more examples of the rsync command:

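```shell
rsync copyfrom copyto
rsync /local1 /local2/
rsync /local user@remote:/remote/
rsync user@remote:/remote /local/
```

rsync cannot copy directly between two remote hosts (user@remote1:/remote user@remote2:/remote/); in that case, run the command on one of the two hosts instead.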

rsync must be installed on all servers. Also note that each remote server must have an SSH server running.

Conclusion

Backups can be vital for restoring servers after all kinds of problems. We hope this fairly long tutorial was useful. There is much more to this topic than we could cover here, so revisit the documentation from time to time; for example, the rsync manual page (man rsync) is always at your disposal.